Tag, You're Malware: Building a Malware Scanning Pipeline with AWS + VirusTotal

I built a real-time malware detection pipeline on AWS using Lambda, S3, and VirusTotal, complete with S3 tagging, CloudWatch alerts, and GitHub Actions CI/CD.

Welcome Back Strangers 👋

It’s been about a month since my last blog post, and I’d like to think I’m becoming more consistent than I was before! As you know from my last post, I developed an interest in the cloud and AWS during my pursuit of cybersecurity. After completing the Cloud Resume Challenge, I brainstormed how I could tie security into AWS, and after a week I came up with this project! I built it using Lambda, S3, Terraform, and the VirusTotal API. Everything is automated and triggered when a file is uploaded to an S3 bucket.

My goal was to simulate how organizations might handle malicious file uploads in production environments. I grew it into a threat response system with structured logging, S3 object tagging, and CloudWatch alerts. In this post, I’ll go over how I built it, the problems I encountered, and how I automated the entire process using GitHub Actions!

Here’s a diagram overview of the project for your reference. If you think it can be improved let me know please!

Key Components of the Pipeline

S3 Trigger + Lambda 🔗

The pipeline begins when a file is uploaded to a specific S3 bucket, in this case abel-malware-upload-dev-1. The upload triggers the AWS Lambda function, which downloads the file to the /tmp directory and prepares it for analysis through VirusTotal.
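Here’s a minimal sketch of what that first step could look like, assuming the standard S3 event notification payload. The helper names (extract_s3_objects, local_path_for, download_objects) are hypothetical and not taken from my actual function, and s3_client is assumed to be a boto3 S3 client passed in by the caller.

```python
import os
from urllib.parse import unquote_plus


def extract_s3_objects(event):
    """Return (bucket, key) pairs from an S3 event notification payload."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in event payloads (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def local_path_for(key, tmp_dir="/tmp"):
    """Map an object key to a file path under Lambda's writable /tmp directory."""
    # Keep only the file name so nested keys do not need subdirectories.
    return os.path.join(tmp_dir, os.path.basename(key))


def download_objects(event, s3_client):
    """Download every object named in the event; s3_client is assumed to be boto3.client("s3")."""
    paths = []
    for bucket, key in extract_s3_objects(event):
        path = local_path_for(key)
        s3_client.download_file(bucket, key, path)
        paths.append(path)
    return paths
```

Passing the client in as an argument keeps the parsing logic easy to test locally without touching AWS.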

[Image: the Lambda function]

VirusTotal Integration 🧠

The Lambda function uploads the file to VirusTotal using the API. It then polls for the result using the returned analysis_id. Once the analysis has completed, the function extracts the scan stats from VirusTotal and determines the final verdict: malicious, suspicious, or clean.

    upload_response = requests.post(
        "https://www.virustotal.com/api/v3/files",
        headers=headers,
        files=files
    )
    upload_data = upload_response.json()  # Parse the JSON response from VirusTotal into a Python dict.
    analysis_id = upload_data["data"]["id"]  # Grab the ID from the response to look up later.

As shown above, the file is sent to the API and it returns a response that we parse into the analysis_id variable.
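The snippet above leaves headers and files undefined, so here’s a hedged sketch of how they could be built. VirusTotal’s v3 API expects the key in an x-apikey header and the file in a multipart field named file. The function name submit_file, the 30-second timeout, and the VT_API_KEY environment variable in the usage note are assumptions for illustration. The post argument is expected to behave like requests.post.

```python
import os

VT_FILES_URL = "https://www.virustotal.com/api/v3/files"


def submit_file(path, api_key, post):
    """Upload a file to VirusTotal and return the analysis ID.

    `post` is expected to behave like requests.post; it is injected so the
    function can be exercised without network access.
    """
    headers = {"x-apikey": api_key}
    with open(path, "rb") as fh:
        # Multipart upload: the field name must be "file".
        files = {"file": (os.path.basename(path), fh)}
        response = post(VT_FILES_URL, headers=headers, files=files, timeout=30)
    # Fail loudly on 4xx/5xx instead of hitting a KeyError on the body.
    response.raise_for_status()
    return response.json()["data"]["id"]
```

In the Lambda this could be called as submit_file("/tmp/eicar.txt", os.environ["VT_API_KEY"], requests.post).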

    for i in range(5):  # Poll the API for the scan status, up to 5 times.
        response = requests.get(f"https://www.virustotal.com/api/v3/analyses/{analysis_id}", headers=headers)
        json_data = response.json()
        status = json_data["data"]["attributes"]["status"]
        if status == "completed":
            stats = json_data["data"]["attributes"]["stats"]
            break
        time.sleep(10)

Now that we have the analysis ID, we can ask the API for the status of the scan. The loop polls up to five times with a 10-second pause between attempts, which gives VirusTotal enough time to return a result. Once the status is completed, the scan stats are saved in the stats variable. This is later used to determine a final verdict as shown below.
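One gap in the loop is that stats never gets assigned if the scan does not finish within five attempts. Here’s a sketch of one way to handle that, returning None when the analysis never completes. The function name wait_for_stats and its parameters are hypothetical, and get is expected to behave like requests.get.

```python
import time

VT_ANALYSIS_URL = "https://www.virustotal.com/api/v3/analyses/{analysis_id}"


def wait_for_stats(analysis_id, api_key, get, attempts=5, delay=10, sleep=time.sleep):
    """Poll an analysis until it is completed.

    Returns the stats dict, or None if the analysis is still not completed
    after `attempts` polls. `get` is expected to behave like requests.get.
    """
    url = VT_ANALYSIS_URL.format(analysis_id=analysis_id)
    headers = {"x-apikey": api_key}
    for attempt in range(attempts):
        response = get(url, headers=headers, timeout=10)
        response.raise_for_status()
        attributes = response.json()["data"]["attributes"]
        if attributes["status"] == "completed":
            return attributes["stats"]
        if attempt < attempts - 1:
            # No point sleeping after the final attempt.
            sleep(delay)
    return None
```

The caller can then decide what a None means, for example tagging the object as unscanned instead of crashing.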

    if stats["malicious"] > 0:  # Logic for the verdict
        verdict = "malicious"
    elif stats["suspicious"] > 0:
        verdict = "suspicious"
    else:
        verdict = "clean"
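The same logic can be wrapped in a small function so it is easy to test on its own. This is only a sketch: the name verdict_from_stats and the min_malicious threshold are hypothetical additions. With the default of 1 it behaves like the code above, where a single engine flagging the file is enough.

```python
def verdict_from_stats(stats, min_malicious=1):
    """Collapse VirusTotal scan stats into a single verdict string."""
    # .get() with a default avoids a KeyError if a counter is missing.
    if stats.get("malicious", 0) >= min_malicious:
        return "malicious"
    if stats.get("suspicious", 0) > 0:
        return "suspicious"
    return "clean"


print(verdict_from_stats({"malicious": 61, "suspicious": 0, "undetected": 6}))
# malicious
```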

S3 Object Tagging 🏷️

Based on the verdict, the Lambda function tags the S3 object with a key-value pair like:

    "verdict": "malicious"

This allows for filtering or quarantine actions later on! I will probably implement this at a later time, gotta make sure the bad files go to jail.
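Here’s a rough sketch of the tagging call, assuming a boto3 S3 client is passed in. The function name tag_verdict is hypothetical. One thing to keep in mind is that put_object_tagging replaces the object’s whole tag set, so any tags already on the object would be overwritten.

```python
def tag_verdict(s3_client, bucket, key, verdict):
    """Tag an S3 object with the scan verdict.

    s3_client is assumed to be boto3.client("s3"). Note that
    put_object_tagging replaces any tags already on the object.
    """
    s3_client.put_object_tagging(
        Bucket=bucket,
        Key=key,
        Tagging={"TagSet": [{"Key": "verdict", "Value": verdict}]},
    )
```

This call is what needs the s3:PutObjectTagging permission on the function’s role.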

Structured CloudWatch Logging 📊

All the verdicts are logged to CloudWatch in a structured JSON Format:

    {
      "event": "virus_scan_completed",
      "bucket": "abel-malware-upload-dev-1",
      "key": "eicar.txt",
      "verdict": "malicious",
      "stats": {
        "malicious": 61,
        "suspicious": 0,
        "undetected": 6,
        "harmless": 0,
        "timeout": 0,
        "confirmed-timeout": 0,
        "failure": 0,
        "type-unsupported": 9
      }
    }

This makes the logs easy to read and easy to build metrics, alerts, and audit trails on. It’s mainly for readability, because I hate reading JSON that has no structure or is all jumbled up.
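A minimal sketch of how a log line like this could be produced, assuming the function simply prints JSON to stdout (Lambda forwards stdout to CloudWatch Logs). The helper names build_scan_log and log_scan_result are hypothetical.

```python
import json


def build_scan_log(bucket, key, verdict, stats):
    """Serialize a scan result as a single-line JSON log entry."""
    return json.dumps({
        "event": "virus_scan_completed",
        "bucket": bucket,
        "key": key,
        "verdict": verdict,
        "stats": stats,
    })


def log_scan_result(bucket, key, verdict, stats):
    # In Lambda, anything printed to stdout ends up in CloudWatch Logs.
    print(build_scan_log(bucket, key, verdict, stats))
```

With json.dumps defaults, the output contains the exact text "verdict": "malicious" (with a space after the colon), which matters if a filter matches on that literal string.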

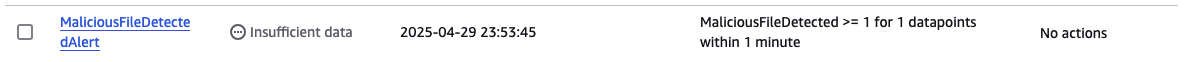

CloudWatch Alarm 🚨

I have a CloudWatch log metric filter that is constantly watching for logs containing "verdict": "malicious" and triggers an alarm if it is detected. This can also be extended to alert via email, Slack, Discord, and several other messaging platforms.
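My filter lives in Terraform, but to show the idea in Python, here’s a sketch of an equivalent metric filter created through boto3. This is a swapped-in illustration, not my actual configuration: the filter name, metric name, and namespace are hypothetical, logs_client is assumed to be boto3.client("logs"), and the JSON pattern assumes each log event is a pure JSON line like the one shown earlier.

```python
# CloudWatch Logs JSON filter syntax: match events whose verdict field is "malicious".
MALICIOUS_PATTERN = '{ $.verdict = "malicious" }'


def create_malicious_metric_filter(logs_client, log_group_name):
    """Create a metric filter that counts malicious verdicts.

    logs_client is assumed to be boto3.client("logs"). The filter, metric,
    and namespace names below are placeholders.
    """
    logs_client.put_metric_filter(
        logGroupName=log_group_name,
        filterName="malicious-verdict",            # hypothetical name
        filterPattern=MALICIOUS_PATTERN,
        metricTransformations=[{
            "metricName": "MaliciousVerdicts",     # hypothetical name
            "metricNamespace": "MalwareScanner",   # hypothetical namespace
            "metricValue": "1",                    # add 1 per matching log event
        }],
    )
```

An alarm on that metric with a threshold of 1 or more would then fire whenever a malicious file is logged.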

GitHub Actions CI/CD ⚙️

All the infrastructure is managed through Terraform, and a GitHub Actions workflow handles packaging the Lambda, installing the necessary dependencies (requests), and running terraform apply on each push to the main branch. Below is my deploy.yml showcasing all the steps set up to fully automate the process!

    name: Deploy Malware Scanner Pipeline

    on:
      push:
        branches:
          - main

    jobs:
      deploy:
        runs-on: ubuntu-latest

        steps:
          - name: Checkout Code
            uses: actions/checkout@v3

          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.12'

          - name: Install and Package Python Dependencies
            run: |
              mkdir -p package
              pip install requests -t package/
              cp lambda/scanner.py package/
              cd package
              zip -r ../scanner.zip .

          - name: Configure AWS Credentials
            uses: aws-actions/configure-aws-credentials@v1
            with:
              aws-access-key-id: $
              aws-secret-access-key: $
              aws-region: us-west-2

          - name: Set Up Terraform
            uses: hashicorp/setup-terraform@v2
            with:
              terraform_version: 1.6.6

          - name: Terraform Init
            run: terraform init

          - name: Terraform Apply
            run: terraform apply -auto-approve -var="virustotal_api_key=$"

Challenges, Decisions, and Fixes 🧱

S3 Notification Race Condition (NoSuchBucket) ⚠️

The first major hiccup I hit was Terraform failing to attach an S3 Bucket notification for Lambda:

    Error: NoSuchBucket: The specified bucket does not exist

This happened despite the bucket being declared in the same plan. The final verdict was that Terraform tried to attach the notification before the bucket was fully registered.

This wonderful error took some time to understand, and eventually I had to split the deployment into two stages: I applied the bucket first, then added the notification in a second apply.

IAM Permission Errors 🔒

I learned real quick, from the IAM permission errors that plagued my screen, that Lambda isn’t allowed to download or tag S3 objects by default. I had to grant those permissions explicitly in the function’s IAM policy:

    "Action": [
      "s3:GetObject",
      "s3:PutObjectTagging",
      "s3:ListBucket"
    ]

and:

    Resource = [
      "arn:aws:s3:::${var.upload_bucket_name}",
      "arn:aws:s3:::${var.upload_bucket_name}/*"
    ]
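The two resource ARNs are both needed because the actions apply at different levels: s3:ListBucket applies to the bucket itself, while s3:GetObject and s3:PutObjectTagging apply to the objects inside it. As an illustration only, here’s a Python sketch that builds a policy document split along those lines. The function name scanner_policy is hypothetical, and this is a tighter variant of the policy, not a copy of my Terraform.

```python
import json


def scanner_policy(bucket_name):
    """Build an IAM policy document for the scanner, scoped per action level."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Object-level actions need the /* resource.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObjectTagging"],
                "Resource": f"{bucket_arn}/*",
            },
            {
                # Bucket-level action needs the bare bucket ARN.
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": bucket_arn,
            },
        ],
    }


print(json.dumps(scanner_policy("abel-malware-upload-dev-1"), indent=2))
```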

Packaging Dependencies for Lambda 📦

I chose to use the requests library for calling VirusTotal. Lambda doesn’t include it by default (unless I’m wrong), so I had to do the following:

    pip3 install requests -t package/
    cp scanner.py package/
    cd package
    zip -r ../scanner.zip .

Now that everything is automated with the GitHub Actions workflow, I don’t need to run these manually anymore! I mainly used them while I was writing and testing the function.

Polling and Timeouts 🔁

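Patience is a virtue. VirusTotal scans are not instant and require patience and time. I needed to poll the analysis status every few seconds until I received a “completed” status in the JSON response. The default Lambda timeout of 3 seconds was too short for that, so I raised it and settled on a 10-second wait between polls with up to 5 attempts. Keep in mind that the Lambda timeout has to cover the whole loop, and the sleeps alone can add up to 50 seconds: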

    for i in range(5):
        ...
        time.sleep(10)
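To make the timing arithmetic concrete, here’s a small sketch that works out the worst case for a loop like the one above, which sleeps after every attempt. The function names are hypothetical, and the per-request timeout and overhead parameters are assumptions, since the loop itself does not set a request timeout.

```python
LAMBDA_MAX_TIMEOUT_SECONDS = 900  # AWS caps a single Lambda invocation at 15 minutes


def worst_case_poll_seconds(attempts, delay, request_timeout=0):
    """Upper bound on time spent in a loop that sleeps after every attempt."""
    return attempts * (request_timeout + delay)


def fits_timeout(attempts, delay, lambda_timeout, request_timeout=0, overhead=0):
    """Check whether the polling loop plus other work fits the Lambda timeout."""
    total = worst_case_poll_seconds(attempts, delay, request_timeout) + overhead
    return total <= lambda_timeout


print(worst_case_poll_seconds(5, 10))       # 50
print(fits_timeout(5, 10, lambda_timeout=10))  # False
print(fits_timeout(5, 10, lambda_timeout=60))  # True
```

So with 5 attempts and a 10-second sleep, the function needs a timeout comfortably above 50 seconds once the download and upload are included.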

Testing with EICAR 🧪

To test the malware flagging, I uploaded an EICAR test file, a harmless file that antivirus engines deliberately flag as malware. It worked well and triggered the entire pipeline:

- CloudWatch Metric Filter

- Alarm

- Tagged the file as verdict = malicious

What I learned 🧾

Conclusion

The project started as a way to simulate how security teams might detect and respond to malicious file uploads in real time. I learned so much about:

- Event-driven security automation using AWS

- Polling external APIs from Lambda

- Managing infrastructure and secrets securely with Terraform and GitHub Actions

- Troubleshooting pesky cloud issues such as IAM permission errors and the S3 race condition

There’s still more I’d like to add, like a quarantine bucket or DynamoDB logging, but I already have a working, automated malware scanning pipeline that deploys on push and alerts me when an upload is flagged as malicious. For now this is a great foundation that I can build upon in the future as I keep expanding my knowledge and skillset. Keep an eye out for more projects to come! If you have any questions feel free to contact me through my socials or email!